Today we will learn how to use Spark on AWS EMR to read a CSV file from an S3 bucket.

Steps:

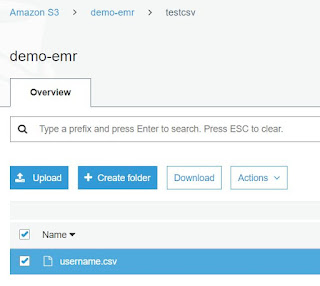

- Create an S3 bucket and place a CSV file inside it

- SSH into the EMR master node

- Get the master node's public DNS from the EMR cluster settings

- On Windows, open PuTTY and SSH into the master node as the "hadoop" user with your key pair (PuTTY cannot read a pem file directly, so convert it to ppk with PuTTYgen first)

- Type "pyspark"

- This launches the Spark shell with Python as the language and creates a SparkSession named "spark"

- Create a Spark DataFrame from the CSV file in the S3 bucket

- Command: df = spark.read.csv("<S3 path to csv>", header=True, sep=',')

- Type "df.show()" to view the contents of the DataFrame in tabular format

- You are done
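
The PySpark steps above could be wrapped in two small helpers, shown here as a minimal sketch. The function names `s3_csv_path` and `load_csv`, the bucket `my-example-bucket`, and the key `data/sample.csv` are placeholders for illustration and are not part of the original steps. `spark` is assumed to be the SparkSession that the pyspark shell provides.

```python
def s3_csv_path(bucket, key):
    """Build an s3:// URI from a bucket name and an object key (hypothetical helper)."""
    # Strip a leading slash so "/data/x.csv" and "data/x.csv" give the same URI.
    return "s3://{}/{}".format(bucket, key.lstrip("/"))


def load_csv(spark, path):
    """Read a comma-separated file with a header row into a Spark DataFrame."""
    # `spark` is assumed to be an existing SparkSession,
    # for example the one the pyspark shell creates on startup.
    return spark.read.csv(path, header=True, sep=",")
```

In the pyspark shell, `df = load_csv(spark, s3_csv_path("my-example-bucket", "data/sample.csv"))` followed by `df.show()` would then print the first rows of the file, assuming the cluster has permission to read that bucket.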
